Databricks Connector

Updated at January 24th, 2024

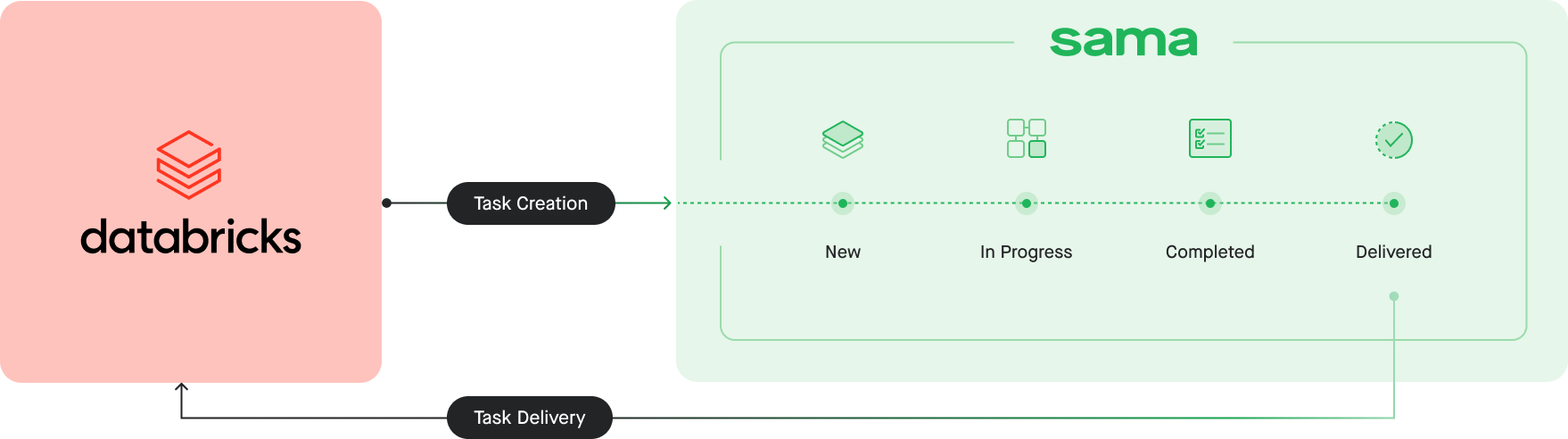

The Sama Databricks Connector lets you create annotation tasks, monitor their progress, and retrieve the annotated results directly from Databricks.

Requirements

- Databricks: Runtime 10.4 LTS or later

- Sama Platform account

Installation

Install the Sama SDK, which includes the Databricks connector, by running the following command in your Databricks notebook, then import the connector's client:

%pip install sama

from sama.databricks import Client

Configure the SDK

You need to specify:

- Your API Key

- Your Sama Project ID

Your project manager will provide the correct Project ID(s) and will already have configured all the necessary inputs and outputs for your Sama project.
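The configuration code in this section expects you to paste both values directly into the notebook. If you would rather keep the API key out of notebook source, one option is to read both values from environment variables. The sketch below assumes the hypothetical variable names SAMA_API_KEY and SAMA_PROJECT_ID; the SDK does not read these on its own, so you pass the returned values to Client yourself.

```python
import os
from typing import Tuple


def load_sama_config(
    api_key_var: str = "SAMA_API_KEY",      # hypothetical variable name
    project_id_var: str = "SAMA_PROJECT_ID",  # hypothetical variable name
) -> Tuple[str, str]:
    """Read the Sama API key and project ID from environment variables.

    Raises ValueError if either variable is missing or contains only whitespace.
    """
    api_key = os.environ.get(api_key_var, "").strip()
    project_id = os.environ.get(project_id_var, "").strip()
    if not api_key:
        raise ValueError(f"{api_key_var} not set")
    if not project_id:
        raise ValueError(f"{project_id_var} not set")
    return api_key, project_id
```

You would then write `API_KEY, PROJECT_ID = load_sama_config()` before creating the client. On Databricks, a secret scope read with `dbutils.secrets.get` is another way to avoid storing the key in the notebook.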

# Set your Sama API key
API_KEY: str = ""

# Set your project ID
PROJECT_ID: str = ""

if not API_KEY:
    raise ValueError("API_KEY not set")

if not PROJECT_ID:
    raise ValueError("PROJECT_ID not set")

client = Client(API_KEY)

# Verify the configuration by calling the Get Project Information endpoint.
# Raises an exception if PROJECT_ID or API_KEY is invalid.
client.get_project_information(PROJECT_ID)

Usage with Databricks and Spark DataFrames

Once the client is configured, you can use the following functions, which accept or return Spark DataFrames:

- create_task_batch_from_table(): creates tasks in the Sama Platform from the data in a DataFrame, to be picked up by the annotators and quality team.

- get_delivered_tasks_to_table() or get_delivered_tasks_since_last_call_to_table(): retrieves delivered tasks, which have been annotated and reviewed by our quality team, into a DataFrame.

- get_multi_task_status_to_table() or get_task_status_to_table(): retrieves the annotation status of tasks into a DataFrame.
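Monitoring tasks from a notebook often means calling a status function repeatedly until the work is finished. The following is a minimal, generic polling sketch under stated assumptions: `poll_until` is a hypothetical helper, not part of the Sama SDK, and it makes no assumption about the arguments the Sama functions take or the status values they return.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def poll_until(
    fetch: Callable[[], T],
    is_done: Callable[[T], bool],
    interval_s: float = 30.0,
    timeout_s: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `fetch` repeatedly until `is_done(result)` returns True.

    Returns the last result. Raises TimeoutError once `timeout_s` seconds
    have elapsed without the condition being met. `sleep` is injectable so
    the helper can be tested without actually waiting.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        result = fetch()
        if is_done(result):
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout_s} s")
        sleep(interval_s)
```

In practice, `fetch` could wrap a call to get_multi_task_status_to_table() and `is_done` could inspect the returned DataFrame for whatever status your project treats as final; take the exact arguments and status values from the SDK reference rather than from this sketch.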

Tutorials

Use our Jupyter Notebook tutorial to create sample tasks from a DataFrame and retrieve delivered tasks into a DataFrame.

Python SDK and Databricks Connector Reference

Other functions available in the Python SDK include:

- Retrieving task and delivery schemas

- Checking the status of and cancelling batch creation jobs

- Updating task priorities

- Rejecting and deleting tasks

- Obtaining project stats and information